On Sunday morning Dan O’Dowd, the CEO of “Green Hills Software”, took out a full-page ad in the New York Times to declare war on Tesla Autopilot. The ad is full of misinformation, misconceptions, and dishonest arguments, and it was funded by Tesla’s competitors. Though it’s silly, I wanted to take a few minutes to respond to “The Dawn Project” ad line by line because what Dan O’Dowd and Green Hills Software are advocating would put my life at risk, put the lives of my friends at risk, and put the lives of the public at risk.

The ad begins:

Making Computers Safe for Humanity

THE DAWN PROJECT

What exactly is the “Dawn Project”? The ad would have you believe it is some sort of charitable endeavor, focused on the noble cause of “making computers safe for humanity”. The reality is that Dan O’Dowd chose to publish the ad as the “Dawn Project” to mask its connection to O’Dowd’s privately owned software firm, Green Hills Software.

Let’s take a look at the Internet Archive. Just a few weeks ago on Christmas Eve 2021, this is what The Dawn Project website looked like. No mention of Tesla, just an ugly site that looked like it was from the 90s with a snake-oil pitch about replacing “all the software that runs the world with software that never fails and can’t be hacked”. This is a claim so patently absurd that even the website had to admit that top security experts would consider The Dawn Project’s goals “a fantasy” or even “a scam”.

In the last couple of weeks, the Dawn Project has paid for its website to be completely redesigned. It now looks like this:

The site has now been completely redesigned at considerable expense, and the $10,000 Tesla prize is front and center at the top of the home page. Below that, a reference to the Tesla campaign is featured for the first time. The website was completely redone in the last two weeks specifically for the Tesla campaign. The “Dawn Project” is not some longstanding organization fighting for “making computers safe for humanity”. It is a front organization hastily set up in the last few weeks to smear Tesla Autopilot while masking the source of the campaign: Green Hills Software, a long-time supplier to Tesla’s competitors.

Besides the cost of the redesigned website and the $10k prize, a full-page color ad in the New York Times is estimated to cost anywhere from $150,000 to $250,000. This is a multi-million dollar campaign focused squarely on taking away life-saving technology from people who depend on it. But why did Green Hills Software try to hide its identity with a front organization? One look at their aging homepage provides some answers:

The ugly and outdated website says a lot about the company’s level of software and design expertise, but the news highlights and header speak even more loudly. You can see that on January 5, Green Hills announced its technologies would be used in the 2022 BMW iX — an electric SUV in direct competition with Tesla. What a coincidence, that’s around the same time the new Dawn Project website was redesigned! The press release confirms that “Several leading Tier 1 suppliers chose Green Hills technologies and expertise to build, certify and deploy their technologically advanced cockpit safety and driver convenience software across many of the electronic control units in the BMW iX vehicle”. In other words the suppliers of ADAS systems used in BMW vehicles, MobilEye and / or Qualcomm, are using Green Hills Software. The press release called the BMW iX “another success story for Green Hills in its nearly 30-year automotive history in which its technology is used by all 12 of the top 12 global Tier 1 suppliers and dozens of automotive brands”.

Green Hills Software isn’t just supplying one of Tesla’s competitors. They claim to be supplying all of them. No wonder they tried to hide who was really behind the ad! The very first line of the ad is a bald-faced lie, a deception to readers about the identity of the author. If Dan O’Dowd had any integrity, he would disclose his financial interests from decades of involvement in automotive.

We did not sign up our families to be crash test dummies for thousands of Tesla cars being driven on the public roads by the worst software ever sold by a Fortune 500 company.

Given that Green Hills’ automotive division is starting to feel threatened by Tesla, this hyperbolic statement comes as no surprise. The declaration that Autopilot is “the worst software ever sold by a Fortune 500 company” is completely subjective hyperbole, with zero substantiating evidence. Indeed, Green Hills has admitted that it is a key partner of automotive suppliers like MobilEye who sell ADAS systems that are far inferior to Tesla Autopilot. For example, the BMW iX ADAS system will charge straight through a red light, causing a collision unless the driver manually disengages. Tesla’s Full Self-Driving Beta software, on the other hand, is designed to stop automatically, and it works amazingly well! What’s more, if you’re driving manually and don’t notice that you’re about to run a red light, the same traffic light detection capabilities will sound an alarm to warn you so you can prevent a collision… yes, even when Autopilot is completely off and the FSD package was never paid for! If FSD is really “the worst software ever”, what does that make ADAS solutions powered by Green Hills technology that are far less advanced?

Besides, is that really the worst software you can think of? Did we already forget about this?

Within the span of five months, 346 people were killed in two crashes involving Boeing 737 Max planes: first off the coast of Indonesia in October 2018 and then in Ethiopia in March 2019

PBS

Boeing is ranked 54th on the Fortune 500. Does Green Hills Software seriously believe that a piece of software that took the lives of 346 people is not as bad as FSD Beta, which has not even caused a single accident, let alone killed someone?

Perhaps the reason Dan O’Dowd is so keen to forget Boeing’s software troubles is that his company was responsible for providing Boeing an operating system:

Yup, that’s the same Boeing 787 that had issues with hacking and major electrical failures half a decade ago.

US aviation authority: Boeing 787 bug could cause ‘loss of control’

The US air safety authority has issued a warning and maintenance order over a software bug that causes a complete electric shutdown of Boeing’s 787 and potentially “loss of control” of the aircraft.

In the latest of a long line of problems plaguing Boeing’s 787 Dreamliner, which saw the company’s fleet grounded over battery issues and concerns raised over possible hacking vulnerabilities, the new software bug was found in plane’s generator-control units.

The FAA considered the situation critical and issued the new rule without allowing time for comment. Boeing is working on a software upgrade for the control units that should rectify the bug.

The plane’s electrical generators fall into a failsafe mode if kept continuously powered on for 248 days. The 787 has four such main generator-control units that, if powered on at the same time, could fail simultaneously and cause a complete electrical shutdown.

The Guardian

So much for software that can’t be hacked and has never failed. In the case of the 787 O’Dowd proudly touted on Twitter, the plane would fail if left on for 248 days like clockwork. And don’t get me started on all the problems, delays, and cost overruns with F-35. You do not want to sign up as a crash test dummy for planes powered by Green Hills Software!

But of course, we don’t get to vet the software Green Hills puts in our planes and in our cars. We don’t get to “sign up” to share the road with drunk drivers and drivers who are drowsy. We don’t get to watch over every DMV driving test. Companies have been testing automated vehicles with safety drivers for more than a decade now. Tesla has been selling Autopilot since 2014, and millions (not thousands) of cars are running it around the world. Why is O’Dowd just getting upset now? It’s simple: They’re just now getting scared. If Tesla succeeds with Autopilot and FSD, Green Hills and their customers stand to lose a lot of money. So much money that it’s well worth it to spend millions just to decrease the chance it happens.

Finally, the implication that a Tesla running Autopilot in your neighborhood makes you a “crash test dummy” is completely backwards. A test dummy is used in a crash test to ensure the risk of injury is minimized. Software like Autopilot, FSD Beta, and Tesla active safety are designed to prevent pedestrians from getting hit. In other words, Tesla is trying to stop you from becoming a crash test dummy, not turn you into one. In fact, hundreds of times a day a car that was about to hit a pedestrian is stopped by Tesla Autopilot — both in manual driving and on Autopilot.

Take a look at the video above to get a sense of what we’re talking about here. Tesla Autopilot helps your car understand the world around it so it can stop things like this from happening. I want you to think about someone you love, and how you would feel if they were hit by a car. I want you to think about how it would feel to lose them. Look beyond the statistics and you’ll see lives destroyed: Not just the victims’ but their families as well. What kind of sick person would try and take this technology away from us? The development of this technology, which is included free on every car, is funded by people who chose to buy Tesla’s FSD package.

In this next video of a Tesla on FSD Beta, look at how human drivers treat the pedestrians crossing the street compared to how respectful the computer is. Is it too much to ask to want to cross the street without feeling like a car is about to hit you?

Still believe that Tesla turns you into a “crash test dummy”? Check out this video of FSD Beta navigating pedestrians, cyclists, joggers, buses and more on a narrow road with no lane lines. Keep in mind, this is pre-release software that hasn’t even been released publicly to all customers yet. Although it’s still in the early testing stages, it’s clear how incredibly powerful it’s going to be once fully rolled out in production.

In the time since the FSD Beta program started in 2020, 1.6 million people have died in car crashes around the world. 62 million people who survived were injured or permanently disabled. More had their day or their finances ruined. Of those people 49,600 Americans died. And guess who is paying the bill? You are. You get higher car insurance bills, medical insurance bills, and traffic tickets because of this ongoing disaster. This problem was not caused by FSD Beta. It was caused by ordinary cars, many of which were running Green Hills Software!

According to Green Hills’ own website, “hundreds of millions of vehicles contain software created with Green Hills Platforms for Automotive”. That’s a lot of vehicles, spanning every brand from Ford, GM and Toyota to Lexus and BMW. For 30 years, millions of people have died because of Green Hills’ sheer incompetence and failure to bring automated vehicle technology to market. Now they want to stop the one company that is actually making real progress, shipping real software to real customers? I wonder how these people sleep at night, knowing that 39 million people have died in car crashes since they became automotive suppliers and they’ve been more focused on profiting from the crisis than solving it. Imagine the lack of conscience required to not only feel no remorse but to go out and try to destroy the competing solutions that people are using and enjoying. Competing solutions that are already preventing crashes every single hour of every single day.

The Dawn Project is organizing the opposition to Elon Musk’s ill-advised Full Self-Driving robot car experiment.

The key phrase here is “organizing the opposition”. This full-page ad is not a one-off thing. Green Hills and O’Dowd are launching a multi-million dollar campaign with the goal of destroying Tesla Autopilot to save their customers. Since their automotive customers don’t want the bad press of opposing life-saving technology with misinformation to save a few bucks, the Dawn Project was created as a front to “organize” all of the opposing companies who are threatened by FSD. They will provide instructions for coordinated action to journalists, short-sellers, legacy auto OEMs and more. Indeed, a “fact check” for the New York Times ad links to a website from TSLAQ, an anti-Tesla hate group created by Tesla short-sellers. This indicates TSLAQ is already involved in this effort, and / or that the Dawn Project has been consuming TSLAQ propaganda.

The problem with the “Tesla Deaths” website is that if someone drives their Tesla off a cliff it will be listed on the website even if it had nothing to do with Tesla. Even if no car would have survived falling off the cliff, it goes on the site. If someone is speeding and crashes into a tree, same thing. Tesla’s FSD beta has not only never killed a single person, it hasn’t even been involved in a car crash to date. The fact that this short-seller propaganda has been cited in a “fact check” tells you everything you need to know about the lack of rigor in this analysis. This ad was guided by emotion, with no hard data to back it up.

Tesla Full Self-Driving must be removed from our roads until it has 1000 times fewer critical malfunctions.

Many uneducated observers might agree with this statement on the surface. After all, isn’t fewer “critical malfunctions” a good thing? The reality is that complacency makes the question of when to launch a driver assistance system more complicated than that.

First, “critical malfunctions” is an overly dramatic term for something ADAS users do every day: disengage! A disengagement could be because you’re about to crash, it could be because the car is doing something you don’t like (but is perfectly valid), or it could just be because you want to drive yourself. Presumably, this analysis only looked at safety-related disengagements, but ADAS users have been disengaging their systems to prevent collisions for over a decade now.

The important thing to understand is that these are driver assistance systems, not self-driving cars! The name of the Full Self-Driving package may confuse some people who aren’t familiar with it, but it is just a driver assistance system not unlike a much more advanced version of cruise control. Ultimately the driver is still in control of the car, and is still taking responsibility for driving themselves. Thus these “malfunctions” are not catastrophes that will inevitably cause accidents and deaths: Driver Assistance systems are designed with the expectation that disengagements are normal and expected. That’s why they make it easy for drivers to take control, and monitor driver attention to ensure nobody goes on their phone and forgets to watch the road. If FSD was “removed from our roads” many more car crashes would occur… all to avoid disengagements that ADAS users are handling routinely without causing any accidents.

Most importantly, you have to look at complacency. Let’s say that FSD Beta really tries to cause a collision every 36 minutes, as O’Dowd and Green Hills claim. Well, if you improved that 1,000 times you would get a situation where FSD Beta tries to cause a collision every 36,000 minutes or roughly every 25 days. Would that be more safe, resulting in fewer crashes? Or would it actually cause more?
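To make that arithmetic easy to check, here is a minimal Python sketch. It only rescales the 36-minute figure claimed in the ad by the 1,000x improvement the ad demands; the function name is made up for illustration, and nothing here is measured data.

```python
MINUTES_PER_DAY = 24 * 60


def scaled_interval_days(interval_minutes, improvement_factor):
    """Interval between events, in days, after an N-fold improvement.

    Hypothetical helper: it assumes the improvement simply stretches the
    time between events by the same factor.
    """
    return interval_minutes * improvement_factor / MINUTES_PER_DAY


claimed_interval_minutes = 36   # the ad's claimed time between potential collisions
improvement_factor = 1000       # the improvement the ad demands

print(scaled_interval_days(claimed_interval_minutes, improvement_factor))  # 25.0
```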

When you have to watch your ADAS system closely and disengage every 36 minutes or so, you’re going to stay fairly alert. People don’t want to get into collisions. When something happens often, you’re going to be ready for it. FSD Beta users all over the country are being trained by experience to watch the software and disengage as needed. Their survival instinct is guiding them to stay vigilant. That’s why there have been 0 crashes on FSD Beta, and millions on Green Hills cars. If you got FSD Beta and it worked so well that it could drive 25 days without crashing… but then on the 25th day it would crash… you probably wouldn’t be able to stop it. Your expectations were built over weeks that the software would function perfectly until it didn’t. It may not be obvious, but training people to disengage and spread the word amongst themselves about issues helps make sure the human failsafe is an expert in handling the system.

When an ADAS is ready to be released is certainly debatable, but if we were to take FSD Beta off the road until it was 1000 times better I’m certain the result would actually be more car crashes. Once again, O’Dowd and Green Hills Software prove themselves to be dangerous ignoramuses putting the public at risk to save their dying automotive business.

Besides, FSD Beta hasn’t even been released to most Tesla customers. A small limited beta program active only in the United States grants access to only the safest drivers who score the highest on a driving assessment. If FSD Beta is not allowed on the road, how will it get 1,000 times better? The project would stall, which is exactly what the competition wants. Obviously, some level of testing on the road would be needed to quantify whether any improvement had occurred at all. Real customers that are spread out naturally all around the country provide real-world feedback that’s essential to being able to one day launch the software to the whole fleet with confidence.

The right time to launch driving automation is yesterday. We cannot continue to do nothing and let people die like Green Hills Software has for decades. Taking the software off the road until it’s “1000 times better” would result in more crashes, more injuries, and more deaths. It’s not just a dishonest suggestion — it’s evil, putting corporate profits over the lives of people you love.

Self-driving software must be the best of software, not the worst.

Again, this is a completely subjective statement that has no meaning or merit. We’ve already established that Green Hills’ automotive software is far worse than Tesla’s, and that its aerospace software has put lives at risk.

Tesla Autopilot and FSD are the best and most advanced driver assistance systems available in the world. Companies like Green Hills Software and their legacy auto customers can’t even come close. If they could, they’d be spending millions of dollars on putting out their own version of FSD Beta instead of trying to stop those who are accomplishing what they’re incapable of.

How about it Green Hills? If you’re so sure that FSD is “the worst software ever”, why can’t you put out something better? You better get to it, or people might start to call you liars and hypocrites.

The Dawn Project has analyzed many hours of YouTube videos made by users of Tesla’s Full Self-Driving. Our conclusions based on videos posted online are as follows:

Hold up. Wait a minute… Are you trying to tell me that this entire ad is based on your opinions formed watching YouTube videos? I’m not sure whether this is a joke or Dan O’Dowd is going senile.

Obviously, you can’t conduct a serious analysis from a handful of YouTube videos. You could maybe do a rough count of disengagements for fun, but numbers would be skewed dramatically by which videos were chosen.

What kind of videos did the uploader choose to publish? What kind of editing was involved? Were the videos evenly distributed across different times of day? What kind of neighborhoods were the tests conducted in? Did you observe normal people doing their routine drives, or people picking extra challenging scenarios to interest online viewers? Nobody wants to watch a video of FSD just driving straight, but that’s what most of driving is! People change their usage patterns in other ways when being watched by the camera too. Normally, people will disengage frequently at their discretion when they’re not happy with how the software is performing. When you’re making a video for YouTube about the software, those patterns change because you’re trying to show off the software to the internet. They’re not there to watch you drive yourself. In short, no definitive conclusions can be drawn from watching these videos and each of the bullet points following this shocking revelation is complete nonsense.

What’s more, Dan O’Dowd and Green Hills Software give very little indication of which videos they watched at all. If they provided a list of videos, anybody could go and double-check their numbers to see if they’re being dishonest or not. If they were being honest, it would be in their interest to publish the list of videos. The fact that they did not says a lot. Surely they didn’t watch any Whole Mars FSD videos…

The only information given about their methodology is as follows:

Amazingly, this entire “analysis” was conducted using only 21 YouTube videos. Whole Mars Catalog alone has published over 327 videos of FSD Beta driving, which means there are thousands of videos across the internet. Why they chose to watch only 21 videos that were 20 minutes long on average is beyond me. This sample size is far too small to draw any conclusions with statistical significance. In most cases, only 2 videos were analyzed for each version of the software. Two! Again, this was all done watching YouTube without any effort to actually get in a car and try the software. Even steps like removing videos based on their title can skew the data.
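For a sense of scale, here is a rough Python sketch of how much footage that sample adds up to. It uses only the numbers quoted in this post (21 videos, roughly 20 minutes each, and the ad's claimed 36-minute interval); the 20-minute figure is an average, so the totals are approximate, and the helper names are hypothetical.

```python
def total_footage_minutes(video_count, average_minutes):
    """Total minutes of footage, assuming every video runs the average length."""
    return video_count * average_minutes


def implied_event_count(footage_minutes, minutes_per_event):
    """Number of events the sample would contain at a given claimed rate."""
    return footage_minutes / minutes_per_event


footage = total_footage_minutes(21, 20)
print(footage)                                      # 420 minutes
print(footage / 60)                                 # 7.0 hours
print(round(implied_event_count(footage, 36), 1))   # 11.7 events at one per 36 minutes
```

In other words, the headline rate rests on about seven hours of video and on the order of a dozen events.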

More importantly, the analysis was conducted using out-of-date versions of Tesla’s FSD Beta software. At the time of the publication of this ad, the latest FSD Beta version released was 10.9. That means at least 7 updates were released since the analysis was conducted. FSD Beta users can tell you that the software has improved dramatically in that time frame. The software tested was roughly 12 – 20 weeks old… months out of date. What’s more, only 11% of the videos analyzed were of the latest version of the software at the time of testing.

In short, this supposed “analysis” is a crock of shit.

This last part of the “analysis” is pretty amusing. Even a campaign to have FSD “removed from the road” admits that between FSD Beta 8 and FSD Beta 10 more than half of all issues were resolved. This is the most negative, biased, and selective analysis they could come up with, and they still had to admit the potential collision rate decreased by 58%!

They claim that at this rate it will take 7.8 years until FSD reaches the level of a human driver. Again, Dan O’Dowd has accidentally revealed he’s bullish. Of course, we all know that the improvements to FSD Beta are not linear, they’re exponential. FSD Beta won’t just keep improving at the same rate; innovations like Dojo and auto labeling will actually increase the rate of improvement. Even in this worst-case linear extrapolation, detractors still admit that in less than 8 years FSD will be ready for driverless operation! That is a stunning admission, and reveals why they’ve suddenly become so insecure. Even people who think FSD should be pulled off the road have to admit that by 2030 it will surpass humans. We’ve come a long way from people saying autonomy is impossible.

If Full Self-Driving was fully self-driving every car, millions would die every day

Unsurprisingly, there is zero evidence to support this outlandish statement. Zero people have died using FSD Beta. There have been zero car crashes. To say millions of people are going to die every single day is pure fear-mongering. Why would people not stay home after the first million people died? Why do they keep getting in the cars? Can they not see that the streets are filled with millions of bodies and crashed cars?

Nobody is putting FSD Beta in driverless mode today, especially not months-old versions of the software that have already been wiped off the face of the Earth. Not only is this conclusion nonsensical, real data suggests the opposite is true. Yes, that’s right: real data, not some boomer watching two YouTube videos.

Here’s the latest data from Tesla’s Vehicle Safety Report. The green line shows the distance an average car in the United States travels before a crash — around 484,000 miles as per NHTSA’s latest report. In Q3 2021 Autopilot traveled around 4.97 million miles before a crash. That’s more than 10 times further than your average car. In Q4 that fell to 4.31 million miles due to seasonality in the data caused by less light, harsher weather, icy roads, etc. (The previous year was 3.45 million miles). Even Teslas without Autopilot went 1.59 million miles between crashes.

That means a Tesla without Autopilot is about 3 times safer than your average car, and a Tesla that is driving on Autopilot crashes about 10 times less than your average car when under human supervision. If everyone in the world was using Tesla Autopilot or FSD to drive and drove the same number of miles, the number of crashes would be reduced by at least 90%, and continue to fall as the software improved.
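Here is a small Python sketch of where those multiples come from. It simply divides the miles-per-crash figures quoted above by the national average and assumes the figures are directly comparable; the variable and function names are my own.

```python
US_AVERAGE_MILES_PER_CRASH = 484_000   # NHTSA figure quoted above

# Miles per crash quoted from Tesla's Vehicle Safety Report
tesla_miles_per_crash = {
    "Autopilot, Q3 2021": 4_970_000,
    "Autopilot, Q4 2021": 4_310_000,
    "Tesla without Autopilot": 1_590_000,
}


def multiple_of_average(miles_per_crash):
    """How many times further than the average car this travels per crash."""
    return miles_per_crash / US_AVERAGE_MILES_PER_CRASH


def implied_crash_reduction_pct(miles_per_crash):
    """Crash reduction at equal mileage implied by the ratio, in percent."""
    return (1 - US_AVERAGE_MILES_PER_CRASH / miles_per_crash) * 100


for label, miles in tesla_miles_per_crash.items():
    print(label, round(multiple_of_average(miles), 1))
# Autopilot, Q3 2021 10.3
# Autopilot, Q4 2021 8.9
# Tesla without Autopilot 3.3

print(round(implied_crash_reduction_pct(4_970_000), 1))  # 90.3
```

The Q3 figure is the one behind the "10 times" and "90%" numbers; the seasonal Q4 figure works out to a bit under 9 times.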

With 1.3 million deaths around the world every year from car crashes, 1.17 million people would have their lives saved annually. 50 million injuries would fall by 45 million a year. 40,000 Americans murdered on the road every year would be reduced by 36,000 annually. You really have to wonder what kind of sick, sadistic person would so callously disregard human life that they’d take out a full-page ad in the New York Times saying that a piece of software that could save over a million lives a year if every car had it would kill “millions of people a day”. My guess is that O’Dowd knows his millions of deaths a day claim is nonsense and that nobody has died, but he’s lying in public anyway to please paying customers like MobilEye and Ford. Some people are so sick that corporate profits matter more to them than human lives.
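Those totals are just the 90% reduction applied to each annual figure. A quick Python sketch, assuming the 90% figure carries over unchanged to deaths and injuries worldwide (that is the assumption this post is making, not something the code establishes):

```python
REDUCTION_PERCENT = 90   # assumed crash reduction, taken from the estimate above


def avoided_per_year(annual_total, reduction_percent=REDUCTION_PERCENT):
    """Cases avoided per year, using integer math to keep the totals exact."""
    return annual_total * reduction_percent // 100


print(avoided_per_year(1_300_000))    # 1170000 deaths worldwide
print(avoided_per_year(50_000_000))   # 45000000 injuries worldwide
print(avoided_per_year(40_000))       # 36000 deaths in the United States
```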

– About every 8 minutes, Full Self-Driving malfunctions and commits a Critical Driving Error, as defined by the California DMV Driving Performance Evaluation

– About every 36 minutes, Tesla Full Self-Driving commits an unforced error that if not corrected by a human would likely cause a collision

We’ve already shown that these numbers are based on an out-of-date and flawed analysis, but even that pessimistic analysis had to admit the improvements we’re seeing are dramatic. Recall that only 2 videos were watched for the latest version of the software at the time of analysis, 10.3.1. Just imagine how much better 10.9 has gotten since then.

Unassisted, Full Self-Driving can’t reliably drive for one day without crashing, but human drivers drive many years between crashes

Again we see a deceptive argument designed to confuse people who don’t understand which Full Self-Driving features have shipped today. None of the features available today make your car driverless. FSD will never drive unassisted because it’s impossible to do so. It can only be used as a driver assistance system.

This latest lie makes it sound like FSD Beta is crashing every day. I’ll remind you once again that to date it has never crashed. They deceive the reader subtly by using the word “unassisted”, alluding to a mode of operation that just doesn’t exist on Tesla vehicles today. Yes, it’s true that FSD Beta would inevitably crash if running driverlessly… which is why that’s not allowed! Once the software has surpassed human level (which will happen faster than you think), that functionality will be enabled. For as long as it can’t drive as well as a human, driverless won’t be enabled. Is Dan O’Dowd really such a moron that he can’t understand this, or is he being paid to make dishonest and deceptive arguments to help save his customers?

Humans are thousands of times better at driving than Tesla Full-Self Driving

Another baseless statement based on a flawed and outdated analysis. As the graph above shows, humans driving with the assistance of Autopilot can drive 10 times as far without crashing. While humans have many advantages, like common sense, software has many advantages too. Software can look in all directions at the same time and move over for a cyclist or motorcyclist you didn’t even see yet. Software doesn’t get tired or distracted. Already today, it often notices things I miss, like an electric scooter speeding through a four way stop sign late at night.

While software has not yet exceeded human capabilities in every aspect, it’s getting better fast and will overtake the world’s best drivers soon. Already today, the safest driver is a combination of both human judgement and software assistance.

The Dawn Project is offering $10,000 to the first person who can name another commercial product from a Fortune 500 company that has a critical malfunction every 8 minutes.

That’s an easy one. I plan to make a video comparing other ADAS systems (preferably one using Green Hills technology) with Tesla FSD Beta. What the video will show is that other ADAS systems require disengagement far more frequently than FSD Beta, for simple things like curved roads that FSD Beta handles with ease. Something tells me that a company this dishonest won’t stay true to their word and honor the prize though. If they do give me $10,000, I will use it to help fund the purchase of FSD for one reader.

“FSD Must Be Destroyed”

The ad ends with the hashtag “#FSDDelendaEst”. This is a dorky historical reference to “Carthago delenda est”, a Latin phrase which translates roughly to “Carthage must be destroyed”. Carthage was a rival city to Rome that fought a number of wars against the Italian city-state before the city was destroyed and its surviving inhabitants were sold into slavery. Wikipedia provides a refresher for those who slept through history class:

After its defeat, Carthage ceased to be a threat to Rome and was reduced to a small territory that was equivalent to what is now northeastern Tunisia.

However, Cato the Censor visited Carthage in 152 BC as a member of a senatorial embassy, which was sent to arbitrate a conflict between the Punic city and Massinissa, the king of Numidia. Cato, a veteran of the Second Punic War, was shocked by Carthage’s wealth, which he considered dangerous for Rome. He then relentlessly called for its destruction and ended all of his speeches with the phrase, even when the debate was on a completely different matter

Wikipedia

Ending the ad with this phrase was no accident. Like Cato before him, Dan O’Dowd looked upon Tesla and was shocked by their wealth and their technology. He concluded that what was happening with FSD was dangerous for Green Hills Software and Legacy Auto. And now, he plans to relentlessly call for its destruction to save himself.

The Campaign Against Linux

This isn’t the first time Green Hills Software has launched a smear campaign against a competing system. As Linux began to eat away at sales for their crappy operating system in the early 2000s, they launched a similar campaign calling Linux “an urgent threat to national security”.

Of course, we now know that this was complete bullshit. Linux wasn’t a threat to national security — it was just competition for Green Hills Crappy Software. It seems that when Dan O’Dowd is threatened by competition, his first reaction is to claim that the competition will kill you. In reality, Green Hills should take a look at their own outdated and dangerous software before pointing fingers at others. Widely used open-source software helps developers find vulnerabilities faster, and hackers can always decompile executables to get the source even when an operating system is closed source. Eventually, Green Hills software caved and now supports Linux fully. When trying to load the whitepaper about how Linux is an urgent threat, you now get this:

Perhaps after the embarrassment of the failed anti-Linux campaign, Dan O’Dowd wised up and realized next time he should create a front organization. That way, it’s more difficult to tie back to the company when things don’t work out.

The Linux campaign was never about safety. It was about Green Hills Software profiting off the backs of taxpayers by spreading misinformation claiming only their products were safe. Decades have passed, but sadly Green Hills’ tactics haven’t changed one bit.

Hands Covered in Blood

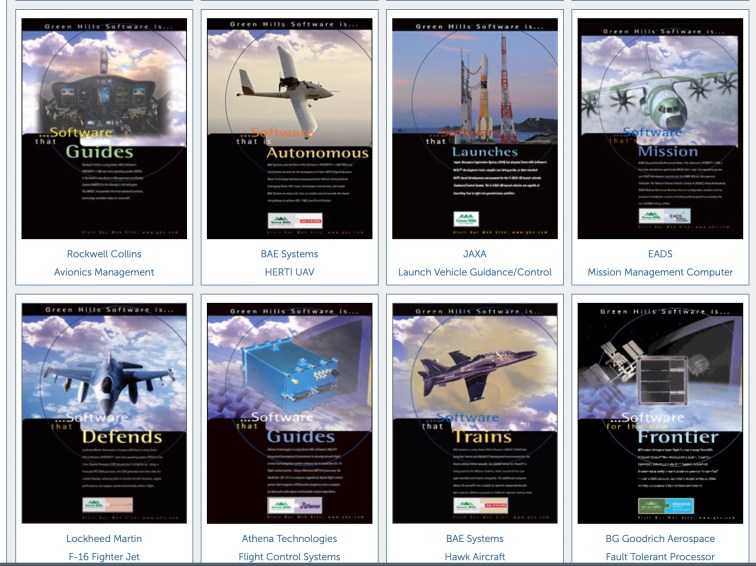

While it’s shocking to see Dan O’Dowd and Green Hills Software put corporate profits over human lives with this anti-competitive smear campaign, O’Dowd has never been afraid to let people die if he can make a few bucks in the process. Check out the customer gallery on Green Hills Software’s website for aerospace and defense.

As if O’Dowd hadn’t done enough damage to the world helping legacy auto pollute and cause car crashes, he must have one day realized it would be far more efficient to just kill people directly. As such Green Hills Software became a major supplier to the military helping power nuclear bombers, fighter jets, and unmanned drones. Now, I’m all for patriotism and I love my country but the fact is this software is used to kill people — often including civilians killed accidentally. The nuclear bombers Dan O’Dowd proudly touts are weapons of mass murder that could cause untold devastation in the wrong hands. Hell, it could even be the end of the world.

I find it pretty rich for a man who claims he became a billionaire trading in murder, war and weapons of mass destruction to criticize FSD. I wonder how many people he and his company have killed? I wonder how many were innocent civilians?

Nonetheless, Green Hills’ military involvement does explain a lot. It explains how Dan O’Dowd can live with himself paying a quarter-million dollars to try to take away life-saving technology from people who depend on it. Who cares if people die so that Green Hills can make a few more bucks on their automotive business before it goes bankrupt? Green Hills’ military software already helps kill people every day, sometimes on purpose, sometimes by accident. The violent imagery of the burning of Carthage and “FSD Must be Destroyed” now makes sense too. This guy lives and breathes warfare, and likes to pretend he’s James Bond. He hides from the public eye because he’s ashamed that he’s made money taking lives.

Organizing the Opposition

If Green Hills Software plans to “organize the opposition” against FSD, we plan to organize the opposition against them. First, boycott Green Hills Software and all of its customers. Encourage others to do the same.

Take the time to write to your representatives and tell them we should be using more powerful commercial alternatives rather than getting locked into out-of-date solutions like Green Hills Software. Tell them you think that Defense Appropriation bills should have a clause that mandates modernization and moving away from old solutions like Green Hills.

Don’t buy from legacy automakers. Write to the DMV, write to NHTSA, just like the anti-Tesla cult is doing. Your voices can make a real impact and potentially save someone’s life. What is one life worth to you?

A Paper Billionaire

The Q&A for the Dawn Project includes this exchange with Dan O’Dowd:

If you have been able to produce software that never fails and can’t be hacked for the last 25 years why aren’t you a billionaire?

I am a billionaire, but my software has been mainly used by the military, and I have kept a low profile until now.

If O’Dowd really is a billionaire, his wealth would be entirely concentrated in the illiquid stock of his privately owned software company. Thus any small changes in the trajectory of the business — such as automotive falling apart — could significantly impact his wealth and ruin his upcoming retirement.

Dan O’Dowd is putting my life at risk

I’m upset about this because Dan O’Dowd is putting my life at risk. I’ve driven 90,000 miles on Autopilot and more than 35,000 miles on FSD Beta. This software keeps me safe every day. It protects the people I love. It stops me from getting speeding tickets.

I used to drive around like a maniac. Now I drive calmly at a reasonable speed on FSD Beta. Yes, I’ve had to disengage many times but I’ve never crashed and nobody else has either. If Dan O’Dowd were to succeed in taking FSD Beta away from me I would be more likely to crash and die. That’s what makes me upset, and it’s well worth pushing back against. We’re not going to let some fool slow down progress so his incompetent software company can try and save their dying business.

Treat Them Better Than They Deserve

Finally, I will close by saying I have no real problem with Dan O’Dowd or the people at Green Hills Software. My problem is with the dangerous ideas they’re spending millions to spread, putting me, my friends and the public at risk.

When we started writing about Missy Cummings’ conflict of interest, TSLAQ tried to spin it as “bullying”. In their view, we have no right to participate in our democracy and should have no say in public policy that affects us directly, even when there’s a clear conflict of interest and the federal government agrees. To make our case strongly, we should attack O’Dowd’s ideas rather than trying to bother him for spreading them.

That means try to be as nice as you can, don’t make anyone at Green Hills Software feel unsafe or uncomfortable or anything like that. Treat them with much more respect than they deserve, but tear their argument to shreds. Share your stories about what you’ve seen actually using FSD, rather than just watching videos about it on YouTube.

Disruption

I struggle to think of a more disruptive force to the auto industry than FSD. Many companies will fight for their life to stop it from being deployed. This is a call to arms for everyone who supports Autopilot and FSD to come together to help spread the word and fight the rampant misconceptions about this technology.

In conclusion, until Dan stops trying to risk our lives for money it is acceptable to call him Dan O’Douche.

Well done Omar! We need more people like you to continue and squash the TeslaQ FUD bs.

Well written and articulated, though I would like to point out that several paragraphs can be twisted by a dedicated TSLAQ staffer and be used to create a damaging narrative to Tesla, like this one:

“When you have to watch your ADAS system closely and disengage every 36 minutes or so, you’re going to stay fairly alert. People don’t want to get into collisions. When something happens often, you’re going to be ready for it. FSD Beta users all over the country are being trained to watch the software and disengage as needed by experience. Their survival instinct is guiding them to stay vigilant. That’s why there have been 0 crashes on FSD Beta, and millions on Green Hills cars. If you got FSD Beta and it worked so well that it could drive 25 days without crashing… but then on the 25th day it would crash… you probably wouldn’t be able to stop it. Your expectations were built over weeks that the software would function perfectly until it didn’t. It may not be obvious, but training people to disengage and spread the word amongst themselves about issues helps make sure the human failsafe is an expert in handling the system.”

They can go like: “Oh, so FSD (beta) is designed to be bad? Oh god fanboys actually paying top dollar for something that is designed… to be bad?? lol lol”

But stick to paragraphs like these, they are so convincing to readers who are not familiar with TSLA and TSLAQ:

“Again we see a deceptive argument designed to confuse people who don’t understand which Full Self-Driving features have shipped today. NONE OF THE FEATURES AVAILABLE TODAY MAKE YOUR CAR DRIVERLESS. FSD will never drive unassisted because it’s IMPOSSIBLE to do so. It can only be used as a driver assistance system.

This latest lie makes it sound like FSD Beta is crashing every day. I’ll remind you once again that to date it has never crashed. They deceive the reader subtly by using the word “unassisted”, alluding to a mode of operation that just doesn’t exist on Tesla vehicles today. Yes, it’s true that FSD Beta would inevitably crash if running driverlessly… which is why that’s not allowed! Once the software has surpassed human level (which will happen faster than you think), that functionality will be enabled. For as long as it can’t drive as well as a human, driverless won’t be enabled… ”

Great exposé about O’Douche’s anti-Linux shenanigans, btw. That’s really something.

Yes, well done Omar!

While there would have been easier ways to contradict Dan, it seems you have masterfully crushed his thesis into the ground. Good research and excellent argumentation, from a higher standpoint.

All that’s needed now is for this to go viral and salt his fields utterly. Another ex-billionaire bites the dust, hoist by his own petard.

This article is very eye-opening! It reminds me of a documentary I watched, where pharmaceutical companies ran smear campaigns against small local farmers to stop the production of healthy foods, forcing us to rely on artificial processed foods so that we get sick frequently and use their medications long term!

And please post this article in video form, so it will be easier for the general population to understand in less time. They may not want to spend the time to read this long article. So please convert this into a video and make spreading this important article easier! Thanks

Thanks for authoring this. Dan is a joke and a fraud — much like most of Teslaq. And we appreciate all that you do for the Tesla community.

It is mind-blowing to me that FSD Beta hasn’t crashed, but Uber killed a passerby. Yet everyone, including professors and experts, is calling to stop FSD but not Uber?

Also, it is sad to me that those professors are biased and support a hate group.

It is destroying the reputation of professors who are real experts and are supposed to be neutral and unbiased, analyzing both sides with their own academic knowledge.

Corporations don’t care about you. Why do you care about a corporate business?

Love that you call him out like this. I shared as soon as I finished reading.

Don’t worship billionaires, Tesla, or Elon, who don’t care about you. You shouldn’t support anyone who doesn’t pay taxes and hoards workers. I believe you need mental treatment. It is called celebrity/billionaire worship syndrome. Take that as soon as possible.

Well done, and thank you! I urge you to try to win the $10,000, even if it only points out the rules hacking restrictions on what danger qualifies one to enter. Ironically, I bet O’Dowd couldn’t win his own prize by submitting Tesla data, though the challenge is supposedly to point out Tesla’s failings. Clever, but insidious.

I applaud you taking the high road by instructing us to respond to the FUD and anti-Tesla propaganda and “Treat them better than they deserve.” “That means try to be as nice as you can, don’t make anyone at Green Hills Software feel unsafe or uncomfortable or anything like that. Treat them with much more respect than they deserve, but tear their argument to shreds.”

Though I understand your feelings (heck, I share them!), I think you fell into a few unnecessary and harmful ad hominem attacks and used some subjective hyperbole yourself that undercut your otherwise excellent arguments and made them less persuasive. Here are some quotes and some suggestions, for what they’re worth:

Q: “No mention of Tesla, just an UGLY [emphasis mine] site that looked like it was from the 90s with a snake-oil pitch about replacing “all the software that runs the world with software that never fails and can’t be hacked”. and “The UGLY and outdated website says a lot about the company’s level of software and design expertise, but the news highlights and header speak even more loudly.”

I think the above statements would be more powerful without the subjective word “ugly.” Then a reader can’t dismiss them with a shrugged “that’s just his biased opinion.”

Q: “Once again, O’Dowd and Green Hills software prove themselves to be dangerous ignoramuses putting the public at risk to save their dying automotive business.”

I’d cut the ad-hominem “ignoramuses” and also “dying.” (maybe change the latter to “shrinking?”)

Whenever you can, change any ad-hominem statement format to a question, like you did here:

Q: “Is Dan O’Dowd really such a moron that he can’t understand this, or is [he] being paid to make dishonest and deceptive arguments to help save his customers?”

Q: “If they could, they’d be spending millions of dollars on putting out their own version of FSD Beta instead of trying to stop those who are accomplishing what they’re incapable of.”

Change to a question or switch “they’re incapable of” to something like “they haven’t yet been able to do themselves.”

Q: “My guess is that O’Dowd knows his millions of deaths a day claim is nonsense and that nobody has died, but he’s lying in public anyway to please paying customers like MobilEye and Ford. Some people are so sick that corporate profits matter more to them than human lives.”

The second sentence is “mind reading”, so change it to question format or cut it. The quotes below have the same problem, because you can’t know for certain what someone is thinking; you can only guess. Do you see how much more powerful your suppositions are when you put them in the form of a question instead of a statement?

Q: “Like Cato before him, Dan O’Dowd looked upon Tesla and was shocked by their wealth and their technology. He concluded that what was happening with FSD was dangerous for Green Hills Software and Legacy Auto. And now, he plans to relentlessly call for its destruction to save himself.”

Q: “As if O’Dowd hadn’t done enough damage to the world helping legacy auto pollute and cause car crashes, he must have one day realized it would be far more efficient to just kill people directly.”

Q: “This guy lives and breathes warfare, and likes to pretend he’s James Bond. He hides from the public eye because he’s ashamed that he’s made money taking lives.”

Thanks for standing up for truth and justice and fairness and saving this beautiful green and blue earth. You speak for me, too–probably better than I could.

When can we get the audio or video version of this article? Please. Thanks